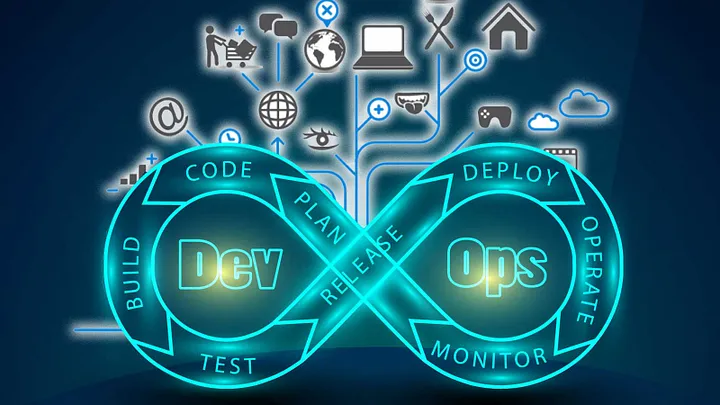

Over the last decade, software development has changed dramatically. It is almost hard to fathom the days of releasing software every six months. Today, many leading companies deploy code to production hundreds of times per day, and this has changed the way we view application testing. Automated testing is the centerpiece of Agile; without it, teams cannot realize the full potential of what Agile can provide. Unfortunately, this is the area where many organizations attempting to adopt Agile struggle the most. The reason is simple: development tests are different from QA tests, and the various types of tests run in different environments. Continuous Delivery and DevOps have made it possible to blur the lines between these concepts in production, which has proven to be very powerful. DevOps is the next step in the Agile evolution.

Definition of DevOps

Basically, DevOps is a way of improving what developers do. Testing in an environment similar to the real infrastructure is crucial, because developers ultimately want to know whether the application is running well or not.

With DevOps, it becomes easier to provide direct feedback to the development team regarding any kind of problems that arise. Ultimately, the development team is responsible for the application, and the operations team can learn from these cases on how to solve application problems in the future.

DevOps serves as a bridge for delivering what the customer wants. It works by moving, step by step, away from the silo culture between the development team and the operations team. In a siloed organization, the development team can concentrate on building a great application, but when the application is handed to the operations team, the operators often run into problems operating it. These problems can have various causes, such as the application not being compatible with the infrastructure, having bugs, or lacking monitoring.

DevOps is a cultural movement that promotes good communication and collaboration between the development and operations teams, using automation. In the IT world, we are already familiar with Agile and ITIL (the Information Technology Infrastructure Library), which are best practices for managing applications. However, the results of these practices or methodologies can be disappointing because there is a gap between what development produces and how it is actually operated in the data center.

DevOps is a set of practices that automate and integrate the processes between software development and IT teams, so that they can build, test, and release the software faster and more securely.

Benefits of DevOps

The implementation of DevOps creates an environment for continuous testing and development. It is said that the quicker an error is found, the cheaper it is to fix. Continuous testing can uncover more errors throughout the development phase, and these can be fixed more quickly. As development and operations work together more often, each side gains a better understanding of the other. This can lead to development producing more operations-friendly software and to better operational deployment, because developers better understand the implications of software changes. In doing this, DevOps has the potential to provide software with fewer defects and a quicker resolution if defects are found during operations.

DevOps focuses on operations and development occurring simultaneously. An example of this would be a developer who has just written some software working immediately with an operations team to deploy it in a test environment. This method focuses on creating smaller, more manageable pieces of work and delivering them quickly. Increased efficiency and productivity can result in a greater ROI for the business and can free up resources to work on other projects.

DevOps also improves collaboration between development and operations teams by bringing separate teams together. This is often achieved by creating “cross-functional” teams consisting of developers, testers, analysts, and IT operations. These teams work together to deliver software in small packages. In doing this, they break the “us and them” mentality which is often found between development and operational teams.

One of the major benefits of DevOps is a significant increase in software delivery speed. It provides an environment with better communication and collaboration between development and operational teams that are traditionally siloed. By improving deployment frequency, DevOps can provide the business with a faster time to market, which is a huge competitive advantage.

Faster software delivery

It’s widely reported that DevOps helps organizations improve their software delivery so they can be more efficient, innovative and push changes through more quickly. And in the high-stakes game of software development, being faster and more efficient is a game changer. As Patrick Debois, the “Godfather” of the first DevOps conference, says, “If you have to wait 6 months for infrastructure, you’re toast.” One of the core DevOps principles is systems thinking, and a fundamental concept in systems thinking is the feedback loop. The longer the feedback loop, the longer it takes to course correct. Creating, testing, and releasing software can be automated and repeated with great efficiency to shorten and amplify the feedback loop. As a result, you can spend a lot less time fixing mistakes, and more time creating additional value. Mike Kavis, VP of Cloud Technology Partners, claims “One of the primary benefits of DevOps is being able to get to market faster and iterate more”.

Improved collaboration between development and operations teams

Software developers and IT operations need to work together in order to build successful software. Let’s jump straight to an example: an operations team initiates a new project to build an application for a banking system. The application needs to fulfill all customer needs for the current banking system, which includes a feature where customers can transfer their money to another account. The operations team must explain the requirements for the application in detail. The dev team must understand all the requirements and build the application to meet them. Whenever a new build of the application is finished, the dev team must give the build to the operations team to test. If bugs or errors appear when the operations team tests it, the dev team must fix the application until there are no errors and the application runs smoothly. At the final step, the application is handed over by the dev team to the operations team, and if there are additional requests for the application, the dev team must take each request and implement it. This is a suitable example of software development and IT operations working together. This is the foundation of DevOps.

Increased efficiency and productivity

All of these software development and operations improvements provided by DevOps will result in quicker and more reliable software delivery.

On the operations side, there are also significant improvements. Through automation, infrastructure can be deployed repeatedly to numerous different stages, such as development, testing, and production, with few or no mistakes. This kind of on-demand, automated infrastructure makes it possible to test the software more effectively than the traditional method.

This kind of software delivery phase also determines the productivity of the whole process from plan to release. The high frequency of software deployment can dramatically impact the software delivery process. Each deployment of the software will add or improve the software functionality, and developers can get real feedback on the software as early as possible. This means that any flaws, defects, or design errors can be repaired early in the process.

Traditional software development and infrastructure management processes can be laborious and time-consuming. QA teams have to wait until the development process is done to start their work and check if the software meets their standards. If it doesn’t, developers have to go through the code, search for the error, fix it, and the cycle repeats. Infrastructure management teams also have to wait until the operations are done to see how the software will work. Even the slightest misconfiguration of infrastructure can affect the software’s performance.

This back-and-forth communication about the software is inefficient, and the time and manpower it consumes can be reduced by utilizing DevOps methodologies.

Enhanced quality and reliability of software

An example of the transformative power of DevOps comes from Adobe. Their implementation of DevOps saw a huge reduction in build and test completion time, from 15 days to 5 hours. This resulted in more thorough testing with quicker feedback, allowing developers to focus on fast resolution of any problems. Adobe also saw an impressive 58% reduction in serious bugs when using a phased deployment versus a traditional method. This helps sustain reliability, since fewer problems mean less work on fixes and more time to work on features. Adobe managed to sustain this high-quality software by using the same tools and processes throughout the whole software lifecycle. This bridging of the gap between development and IT operations is a core concept of DevOps and will be discussed further in the next section.

High performing teams have been proven to be more reliable under the DevOps methodology. High performers are spending 22% less time on unplanned work and rework, and time to resolve incidents is 50% faster. This also shows in availability. High performers are spending 50% more time on new features/functionality, and this is supported by having 99.99% server availability. DevOps culture also supports failing fast and often. This may sound counterproductive, but it increases reliability. By learning from small failures, a system can be made more resilient in the long run.

Quality and reliability of software have always been a primary concern of businesses when delivering applications to their end users. DevOps tackles this issue through its cultural philosophies and the automation it encourages. By automating repetitive tasks, deployment, scaling and testing can all be performed unattended. DevOps engineers will then have more time to work on new features and bug fixes. This in turn shortens the time between fixes. When a developer makes a new version of a feature or a bug fix, that change will be in production in a short amount of time. This small change can then be assessed in the production environment.

Key Principles of DevOps

Eight practices associated with the CAMS model (culture, automation, measurement, sharing) are:

- Develop a culture and environment where building, testing, and releasing software can happen rapidly, frequently, and more reliably.

- When there is an understanding of shared goals where everyone involved from developers to operations are all working towards the same objective, there can be a better understanding of the process involved in reaching the goal.

- By automating the processes anywhere from development to production, it can increase the reliability of steps taken to accomplish the goal.

- This can enable testing to be done continuously and allow for fixes or changes to be done at any point.

- This can ultimately result in a higher quality product as well as less time taken.

- Treat delivery like a factory where automation is used constantly. This allows for simplified maintenance, a consistent environment, and a product that can be easily repeated.

- By automating an environment, there is less risk of human error, allowing more time for other important tasks.

- The environment and product can also be easily repeated with no deviation from a more successful formula. This results in it being easier to maintain and troubleshoot the product, reducing time taken to do so.

DevOps primarily focuses on four basic principles that serve as the cornerstones of the system. These principles are intended to create a mindset focused on increased deployment frequency, lower failure rate of new releases, shorter lead time between fixes, and a faster mean time to recovery. These principles are:

- culture,

- automation,

- measurement,

- sharing.
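The four outcomes these principles aim at (deployment frequency, failure rate of releases, lead time, and mean time to recovery) can be computed from a simple deployment log. The following is only a minimal sketch: the `Deployment` record, its field names, and the choice to measure lead time from commit to production are assumptions made for illustration, not a standard schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical record of one release (field names are illustrative)."""
    committed_at: datetime                    # when the change was committed
    deployed_at: datetime                     # when it reached production
    failed: bool = False                      # did the release cause a failure?
    recovered_at: Optional[datetime] = None   # when service was restored


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def delivery_metrics(deployments: List[Deployment], period_days: int) -> dict:
    """Summarise the four outcomes for one reporting period."""
    failures = [d for d in deployments if d.failed]
    lead_times = [hours(d.deployed_at - d.committed_at) for d in deployments]
    recoveries = [hours(d.recovered_at - d.deployed_at)
                  for d in failures if d.recovered_at is not None]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "change_failure_rate": len(failures) / len(deployments) if deployments else 0.0,
        "mean_lead_time_hours": mean(lead_times) if lead_times else 0.0,
        "mean_time_to_recovery_hours": mean(recoveries) if recoveries else 0.0,
    }


# Example with two made-up deployments on the same day.
t0 = datetime(2024, 1, 1, 9, 0)
history = [
    Deployment(t0, t0 + timedelta(hours=2)),
    Deployment(t0, t0 + timedelta(hours=4), failed=True,
               recovered_at=t0 + timedelta(hours=5)),
]
metrics = delivery_metrics(history, period_days=1)
```

Tracking these numbers over time, rather than as a one-off, is what makes the measurement principle useful: a team can see whether a process change actually shortened lead time or recovery time.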

Continuous Integration (CI)

Continuous Integration is the practice of frequently integrating new or changed code into the existing codebase. The goal here is to not allow feature branches to diverge too far from the trunk. By doing this, you can help avoid many integration pitfalls.

Continuous Integration relies on test automation, but it is not solely a test automation system. It is better described as a set of behaviors, often implemented using UI and unit tests. The more of these tests that can be run by the integration system, the more integration sins you can catch.

For example, consider a project which has no CI and where integration is done on a monthly basis. Three weeks into the cycle, John decides he’ll fix the annoying bug which has been affecting the foo widget. His changes are a complete disaster, but he doesn’t notice this until 2 hours into the changes, when the system state is completely screwed.

At this point, he’s wasted 2 hours of his time. With CI and very frequent integration, it’s likely that he could have made the same changes, discovered they were harmful almost immediately, and wasted only 10 minutes. The shorter the time frame between a change and the discovery of a bug, the easier the bug is to fix.

So a successful CI implementation should have lots of very short-lived task/feature branches coming off the trunk and being reintegrated often. An often quoted rule is that if something is painful and you don’t like doing it, you should do it more often, and CI is no exception to this rule. At this point, a would-be CI-er will be thinking “but if we’re always merging back into the trunk, we’re going to be spending all our time fixing bugs!” and this is indeed a common reaction. However, John’s changes to the foo widget are a perfect example of a hidden bug which would have caused real trouble had it been left to manifest at a later date.

To integrate properly, you must first have a good enough test suite. How good is good enough is a matter of personal choice.

The basic CI rule here is that if you aren’t confident in your software’s deployability, you should not be committing it to the trunk. If you do, you’ll likely be thrown into the world of “integration pain”, and this is not fun. In the worst-case scenario, no testing will be done and it will be down to the poor developers and your unfortunate customers to discover what exactly is broken. At the other end of the scale, there are some superstitious developers who claim that code which has not been tested manually works better than code which has, and therefore advocate the deletion of the test suite and all known copies of The Way Of Test.
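The rule can be pictured as a gate that runs every automated check before a change is allowed onto the trunk. The sketch below is illustrative only: `run_checks`, the check names, and the toy `add` function are hypothetical, and a real CI server would invoke the project’s actual test suite instead.

```python
from typing import Callable, Dict, List, Tuple

Check = Callable[[], bool]


def run_checks(checks: Dict[str, Check]) -> Tuple[bool, List[str]]:
    """Run every check; return (all_passed, names_of_failed_checks)."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            ok = False  # a check that crashes counts as a failure
        if not ok:
            failed.append(name)
    return (not failed, failed)


def add(a: int, b: int) -> int:
    """Toy function standing in for the code under test."""
    return a + b


# Hypothetical checks for a proposed change; the second one is wrong on purpose.
checks = {
    "unit: add works": lambda: add(2, 3) == 5,
    "unit: broken expectation": lambda: add(2, 2) == 5,
}

passed, failed = run_checks(checks)
may_merge = passed  # the change reaches the trunk only if every check passed
```

Because the gate runs on every small change, a failure points at a few minutes of work rather than weeks of accumulated edits.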

Continuous Delivery (CD)

Continuous delivery (CD) builds on continuous integration (CI). In continuous delivery, the code is built, tested, and then released to production. The main purpose is to keep new changes to the system ready for release. The CD practice is to release every single change that passes the build phase, which makes the deployment and testing phases easier.

In a simple deployment, we sometimes forget what we have built into the system and then have to remember it or work it out from the code. By releasing every single change to the system, we can see what we have built directly. Testing in this setting falls into two kinds: testing each individual change to the system, a style often associated with Test Driven Development (TDD), and testing the whole system. Usually, these are the same tests used in the development phase.

Testing methods vary, but we need to remember that the best way to deliver every single change to the system is also the most appropriate way to test it. To apply CD, there must be automation. If everything is done manually, there is a high probability of deployment failure, because humans tend to forget steps. The automation in CD produces self-tested code that can be released to production, known as the self-tested deployment phase. Usually, this means first running a test deployment on a different server to avoid mistakes when deploying code to the production environment.
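The build, test, and release flow described above can be sketched as an ordered list of stages in which a failure stops everything that follows. This is a simplified illustration under stated assumptions: the stage names and the stand-in functions are hypothetical, and real pipelines are normally defined in a CI/CD tool rather than hand-written.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns a log with one entry per stage that was attempted.
    """
    log = []
    for name, action in stages:
        ok = action()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later stages, including production, never run
    return log


# Hypothetical stages; each lambda stands in for a real build/test/deploy step.
healthy = [
    ("build", lambda: True),
    ("test", lambda: True),
    ("deploy to staging", lambda: True),
    ("deploy to production", lambda: True),
]
broken = [
    ("build", lambda: True),
    ("test", lambda: False),  # a failing test blocks the release
    ("deploy to staging", lambda: True),
    ("deploy to production", lambda: True),
]

healthy_log = run_pipeline(healthy)
broken_log = run_pipeline(broken)
```

Placing the staging deployment before production mirrors the practice of rehearsing a deployment on a different server first.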

Infrastructure as Code (IaC)

Infrastructure as Code is one of the most important and often overlooked parts of what DevOps can accomplish. The idea that you can script all the necessary elements for your production environment is incredibly powerful. Being able to version and iterate on these scripts means that you can scale your environments more reliably, with less risk, and at a lower cost. This, in turn, means that you can scale your production environment to match your scaling user base, as opposed to over-provisioning earlier on to account for future growth.

Additionally, as all environments should be identical in IaC, testing can be done very early in the development cycle. Unit and integration tests can be run against environments launched from the same scripts, testing the very things those environments will later be hosting. The idea that environments can be disposable and completely interchangeable is a very important part of both scaling and high availability. Treating servers and even whole data centers as replaceable parts is an alien concept to many, but doing so can provide a massive benefit. Ten servers which each need to be individually fixed if a hardware fault occurs are far less expendable than 100 identical virtual servers which can be thrown away and replaced at a moment’s notice. This also ties in with cost-effectiveness, as on-demand resources can be treated more like utilities, with users only paying for what they consume.
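As a rough illustration of identical, disposable environments, the sketch below describes an environment as data and renders any number of interchangeable servers from it. The `ServerSpec` and `EnvironmentSpec` types, the image name, and the `render` function are assumptions made for this example; real IaC tools use their own declarative formats.

```python
from dataclasses import asdict, dataclass, replace
from typing import Dict, List


@dataclass(frozen=True)
class ServerSpec:
    image: str       # hypothetical machine image name
    cpu: int
    memory_gb: int


@dataclass(frozen=True)
class EnvironmentSpec:
    server: ServerSpec
    count: int       # how many identical servers to run


def render(env_name: str, spec: EnvironmentSpec) -> List[Dict]:
    """Expand a spec into concrete server descriptions for one environment."""
    return [
        {"name": f"{env_name}-web-{i}", **asdict(spec.server)}
        for i in range(spec.count)
    ]


def without_names(servers: List[Dict]) -> List[Dict]:
    return [{k: v for k, v in s.items() if k != "name"} for s in servers]


# One versioned definition drives every environment.
base = EnvironmentSpec(ServerSpec(image="web-app-v42", cpu=2, memory_gb=4), count=3)

staging = render("staging", base)
production = render("production", base)

# Scaling is a one-line change to the spec, not manual work on each server.
scaled = render("production", replace(base, count=100))
```

Because the spec is plain data, it can be kept in version control and reviewed like any other code change.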

Automation

Among the general principles of DevOps, automation is a central element. Many people have experienced having to manually configure a server, deploy applications, or test something, which is inefficient and time-consuming. Using automation in the DevOps process is very important to reduce human error and make more efficient use of time.

Examples of automation tools that could be used are Puppet and CFEngine. These tools help system administrators configure servers and maintain configurations: the administrator writes the desired state of a server, and the tool automatically configures the server to that state. This helps keep the server in the desired state and reduces misconfigurations. The steps to automation in the DevOps process are simple: write a script, run the script, and version and test the automation script just like code. This is good practice for developers before they automate their deployment process.
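The desired-state idea can be shown with a few lines of code. This sketch does not use Puppet’s or CFEngine’s actual languages or APIs; the `converge` function and the dictionary representation of a server are assumptions chosen to show the principle that applying the same desired state twice changes nothing the second time.

```python
from typing import Dict, List


def converge(current: Dict[str, str], desired: Dict[str, str]) -> List[str]:
    """Bring `current` in line with `desired`, in place.

    Returns a description of each change made; an empty list means the
    server was already in the desired state.
    """
    changes = []
    for key, value in desired.items():
        if current.get(key) != value:
            changes.append(f"set {key}: {current.get(key)!r} -> {value!r}")
            current[key] = value
    for key in list(current):
        if key not in desired:
            changes.append(f"remove {key}")
            del current[key]
    return changes


# Hypothetical server state: package name -> installed version or status.
server = {"nginx": "1.18", "telnet": "installed"}
desired = {"nginx": "1.24", "ntp": "installed"}

first_run = converge(server, desired)   # applies three changes
second_run = converge(server, desired)  # nothing left to do
```

Running the same definition repeatedly is safe, which is why such scripts can be scheduled to run continuously and correct drift.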

Challenges and Solutions in Implementing DevOps

Lack of IT skills is one of the challenges faced by the industry. It includes lack of knowledge and insufficient training. This problem is compounded by the increasing cost of IT education nowadays, which affects people’s ability to reach new professional heights. IT graduates face a further problem: despite the increasing cost of their education, most fresh graduates still find it difficult to get their first jobs because they lack experience as well as the specific skills required by the industry.

The most commonly cited problem faced when moving to DevOps is “Resistance to Change” from Operations. It creates a potential conflict, as developers appear to be trying to automate operations jobs away, which makes ops feel marginalized. This can also be due to the change in the skill sets required, with an emphasis on new infrastructure automation tools in place of older legacy systems. There’s also the issue of Operations and Development silos, where it has been traditional for these groups to work in isolation. When that isolation is removed by integrating Development and Operations in a DevOps culture, handover of new applications and features can become a pain point. By unifying the two teams and working from a single agile or lean methodology, each team will gain a greater understanding of the other’s challenges. An excellent way to build understanding and break down any remaining silos is to use The Three Ways as a discussion framework for identifying the constraints on improving the value stream of the IT system.

Resistance to change and cultural barriers

So what kind of resistance would a movement so well founded have to contend with? Well, as it turns out, there are a myriad of reasons why individuals and companies might be disinclined to switch over to DevOps, despite the touted benefits. Some employees might fear that their jobs or their skill sets would become obsolete, and this is not an entirely irrational fear—IT will change to some degree, and there are some traditional operational or infrastructural IT roles that may not carry over.

A recent HP presentation on DevOps went so far as to suggest retraining developers as the solution to the Ops/Dev divide, but in reality this may not be the best course of action for the individuals or the company. High automation and a better understanding of broader system interactions, albeit with less time spent firefighting, suggest that developers will need a better functional understanding of systems and will rely less on the previous trial-and-error expedient. Ultimately this might change the way software is developed, but it might not mean developers are the best systems administrators for their own applications. The presentation also suggests, disturbingly, that SysAdmins would make better developers. Though this may be true to some degree, it might be preferable for them to develop their skill set within the development teams. This might simply mean a change in function within existing teams, and might not affect these individuals as developers at all.

The agility that results from breaking down the walls and silos between traditionally separated elements of IT business and development might mean more focus on a blend of roles and a more jack-of-all-trades IT populace at large. This theoretical future paradigm differs from present-day reality; it is an unsettling transition to have hanging over one’s head and might scare some IT workers away. But it would be incorrect to suggest that it’s only low-on-the-totem-pole IT workers fearing job loss who may take issue with DevOps. In the siloed landscape of traditional IT companies, there are professionals who have grown to be pillars in their spheres of influence. They may be the best in the business at a particular element of IT, but might not feel that they are equipped to handle the wider array of complex duties that DevOps would have them take on.

Another type of resistance might take the form of a certain skepticism of the new movement, found in those who have seen many IT management methodologies and systems come and go. This form of resistance can be seen as a wait and see approach, and is exceedingly common for larger companies with deeply entrenched infrastructure and legacy software that is costly to change. These individuals and companies might not contest the effectiveness of DevOps as a tool to manage brand new software from inception to end of life, but may not feel that there is a feasible way to apply DevOps methodology to existing software without massive interruption or high migration costs.

This sentiment is partially echoed in the Agile Manifesto’s value stating that “Responding to change over following a plan… That is, while there is value in the items on the right, we value the items on the left more”. This is not to say the Manifesto itself is bad for Agile or DevOps, as both embody the spirit of these comments in the most ideal implementation. Finally, it’s not difficult to find an older enterprise IT worker who would claim “we’ve been doing what’s now called DevOps for years and it was just called good IT”. These claims might not be entirely incorrect, as DevOps and Agile are to some degree based on best practices and methodology drawn from the good experiences of many IT professionals from the days before IT became so closely tied to computer science. However, this is not something that a recent comp-sci graduate working in a company that is selling DevOps as a new spin on IT management will be content to hear.

Lack of skills and expertise

Unfortunately, in conventional operations, very little knowledge sharing takes place. Decisions and events remain tribal knowledge and are not documented. There is very little monitoring information, as scripts and tools are written ad hoc and run exclusively by their authors. Testing, development, and operations environments are regularly mixed without constraint through direct edits. This makes it difficult to reproduce identical environments for development and testing, which is frequently a source of bugs and issues later in the process. At the same time, developers are not exposed to the operational issues their code introduces because of the absence of communication, and the out-of-hours support burden frequently falls to the operations teams alone. All this is a major obstacle to effective troubleshooting and problem-solving, and it can be mistaken for an inability to tell right from wrong. Quite often it is simply a matter of not having enough information to make the proper decision.

Developing collective understanding and consideration in DevOps can be challenging, especially for companies still working in silos with segmented skill sets. Fundamental to DevOps is the idea of treating your group as one organized, cross-functional team, and in doing so, everybody must understand the situation of everyone else involved in the project. Exposure is the practice of sharing information in context, candidly and at a time when it can be acted upon, so that the data can be used to diagnose issues, make decisions, improve customer experience, or influence business decisions.

Integration and compatibility issues

After hearing the opinions of our peers, it is still our belief that DevOps will further facilitate software development and that it is relevant to Agile development, if not an evolution of it. After researching this topic in depth, we feel that it is a necessary practice for all software development and IT-oriented work, and an effective way of achieving the goals of all previous methods of development and operations.

Interview Results

Interviews were conducted with two people, an agile project leader and an agile development manager. The purpose was to get feedback, to see whether they had come into contact with DevOps in their recent experience, and to get their opinions on it. The result was nothing short of significant. The project leader said that he had not come into contact with DevOps, but after hearing it described, he recalled times when working with developers and IT personnel involved difficulty in communication and problem resolution during development, as well as issues in software deployment. The agile development manager said that his company is currently adopting an agile methodology and aims to build software continuously and develop a platform for software maintenance. His view of agile development aligns with the progression towards DevOps, and he intends to learn more about the topic and perhaps implement it in the near future.

The authors of this paper are in violent agreement that the implementation of DevOps in software development will facilitate the build process of the software and provide more efficient and rapid development, and that it can be seen as a progression of the agile methodology that sustains the future of development more effectively. This view has been expressed by everyone from large software companies to small start-ups. There are many case studies available testing DevOps on concrete examples and showing significant results, and from our assignment interviews, we have seen that our customers have in one way or another implemented DevOps.

In summary, DevOps is the offspring of agile software development, born from the need to keep up with increased software velocity, and it has evolved from two historic movements. The first is to systematize all development and operational activities. The second is to use Agile infrastructure to provide IT resources rapidly to application development, which in turn was aligned with the Information Technology Infrastructure Library (ITIL). The intersection of these two movements has produced a new practice for development and operations (this collaboration between infrastructure and development has also been called InfoDev). This new movement has been called many things, but for the purposes of this paper, the practice will be referred to as DevOps. This title was chosen because the practice facilitates development in an agile manner that can be sustained in the future. The phrasing was taken from an agile development manager in a large company building Agile tooling for dynamic simulation and continuous maintenance.

Strategies to overcome challenges

Selecting a pilot project that is focused on tools, technologies, and infrastructure will quickly give initial wins. This can build faith in the new approach inside the organisation. Starting with an initial project that is small and low risk is probably a good way to overcome the first two challenges that are expected.

When changes introduce something new, it is normal that some time is needed to show there is tangible value. By choosing a small project, there is usually less resistance to the change. The initial success will then set the direction for future efforts. A proven method for addressing resistance to a new process or technology is to show demonstrable improvements and success with the new approach. An indirect approach may also be more effective to start with. For example, instead of attempting to retrain or convert existing staff to acquire the new skills required, consider bringing in new people who already have these skills and rebuilding the team around them. Redefine the same project and try to replicate it with the new team. The team will have a high likelihood of success, and it will be easier to demonstrate the new team’s value because the recent success will be fresh in the comparison. This approach may be easier than trying to justify why the existing team has to be trained in a specific new skill, because that would be an attempt to discard the belief that the team is already competent and productive.

Conclusion

This is a bold statement but if it’s true, there are going to be a lot of organizations scrambling to play catch up in the next few years by trying to recruit some DevOps talent or by trying to re-badge their existing ‘not quite agile but certainly not waterfall’ processes as DevOps. From what we have seen in organizations already adopting DevOps, the demand for people with DevOps skills and knowledge is at an all-time high and this is only set to continue. This will eventually result in two things. Obviously it means people with the skillset will be able to demand higher salaries, but more importantly, it will lead to a big shift in University and TAFE/College IT courses as they scramble to adjust their curriculum to teach DevOps from the get-go. A recent informal Twitter survey by Jez Humble (a well-known DevOps author and presenter) asked if people would hire a recent computer science grad if they knew their stuff about version control, automated testing, and system administration. Of more than 50 responses, 90% were “yes”. A similar question asking if they would hire the graduate without these skills had only 10% “yes” responses. This suggests that, in time, an IT graduate without DevOps knowledge will find that the answer to that second question is no.

DevOps isn’t just a fad. It’s a new way of thinking and a new way of working which will stand out as the way to deliver change (rapidly, reliably, and optimally) in application delivery for the foreseeable future.

DevOps has quickly risen to become a buzzword in the application development and delivery space. And at first glance, it appears to be little more than a trendy new name for what we already do. DevOps focuses on helping organizations to rapidly produce software products and services, but in a way that keeps the process repeatable and reliable and, most importantly, ensures that speed doesn’t compromise quality. This sounds good in theory, but the big question is how, and what is different from what we are already doing? From our experience, the move to DevOps is as much a culture shift as it is a process shift.